In this fourth article, we will look at some more concepts of container networking.

Unix Domain Sockets - Communication with Unix Domain Sockets

Within a single host, UNIX IPC mechanisms, especially UNIX domain sockets or pipes, can also be used to communicate between containers.

Run the first container as,

docker run --name c1 -v /var/run/foo:/var/run/foo -d -ti docker.io/jagadesh1982/testing-service /bin/bash

And second container as,

docker run --name c2 -v /var/run/foo:/var/run/foo -d -ti docker.io/jagadesh1982/testing-service /bin/bash

Containers c1 and c2 can now talk over the UNIX socket. Apps running in c1 and c2 can talk to each other by using the socket file /var/run/foo.
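
As a rough illustration of what the two apps could look like, here is a minimal Python sketch of a server and a client talking over a UNIX domain socket. The function names (serve_once, send_message, demo) and the upper-casing reply are assumptions made for this example; the actual testing-service image may do something entirely different. The socket path is passed in as a parameter, so inside the containers it would be a path under the shared /var/run/foo mount.

```python
import socket
import threading


def serve_once(sock_path: str, ready: threading.Event) -> None:
    """Accept one connection on a UNIX domain socket and reply in upper case."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
        server.bind(sock_path)   # creates the socket file on disk
        server.listen(1)
        ready.set()              # tell the client it may connect now
        conn, _ = server.accept()
        with conn:
            data = conn.recv(1024)
            conn.sendall(data.upper())


def send_message(sock_path: str, message: bytes) -> bytes:
    """Connect to the socket file, send one message and return the reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(sock_path)
        client.sendall(message)
        return client.recv(1024)


def demo(sock_path: str) -> bytes:
    """Run server and client in one process, only to show the round trip."""
    ready = threading.Event()
    server_thread = threading.Thread(target=serve_once, args=(sock_path, ready))
    server_thread.start()
    if not ready.wait(timeout=5):
        raise RuntimeError("server did not start listening")
    reply = send_message(sock_path, b"hello from c2")
    server_thread.join()
    return reply
```

In the two-container setup, serve_once would run in c1 and send_message in c2, both pointing at the same path inside the shared volume. Only the socket file is shared here; the containers keep separate network namespaces.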

Container to container communication in a POD

Every pod in Kubernetes has its own IP address. Containers within a pod share a network namespace, so they can talk to each other on localhost. This is implemented by the network container, which acts as a bridge to dispatch the traffic for every container in the pod. This is just an extension of the container network mode in Docker. Let's see how this is implemented in Kubernetes.

[root@manja17-I13330 kubenetes-config]# kubectl create -f basic-multi-container-pod.yml

pod "testing-service" created

[root@manja17-I13330 kubenetes-config]# kubectl get pods

NAME READY STATUS RESTARTS AGE

testing-service 2/2 Running 0 9s

[root@manja17-I13330 kubenetes-config]# kubectl describe pod testing-service

Name: testing-service

Namespace: default

Node: manja17-i14021/10.131.36.181

********

IP: 10.38.0.1

Containers:

test-ser:

Container ID: docker://a84bf753a617a54bb9891ceccaca595ecc154a05731faf48a25a87165ae8d036

shell:

Container ID: docker://3bf5128f6603594f60c88972f6881d750c22d18aef28f85b18a5f19131a42549

*******************

When we describe the pod, we can see both containers along with their container IDs.

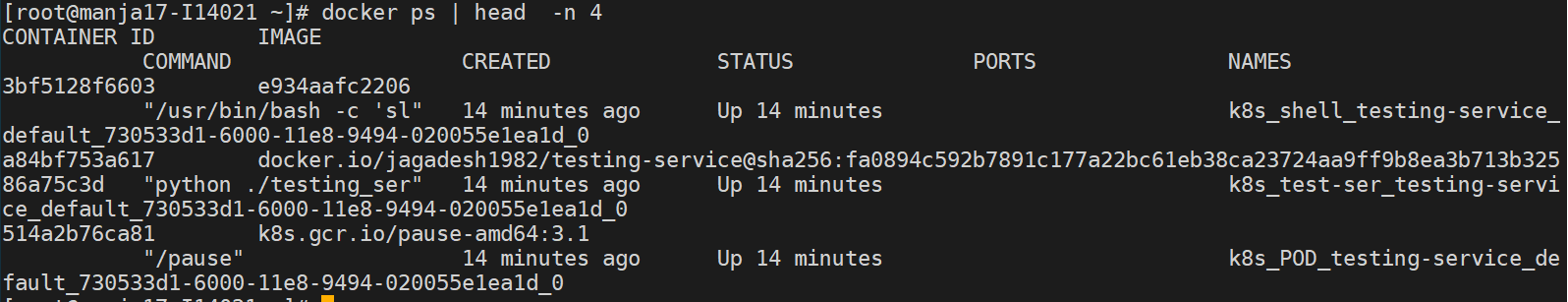

In the above case we have two containers, test-ser and shell. The pod was created on the node manja17-i14021/10.131.36.181. Log in to that node and run docker ps to see the most recently created containers.

From the above image, we can see three containers that were created at the same time:

3bf5128f6603

a84bf753a617

514a2b76ca81

The first two are the same containers that we started in the pod. The third one is called the network container. Before going too deep into what this container is, let's first look at the network mode of the first two containers. We can run

[root@manja17-I14021 ~]# docker inspect 3bf5128f6603 | grep NetworkMode

"NetworkMode": "container:514a2b76ca81521c7190d15604a846111e39cc72201b980f71c7044e865f2341",

[root@manja17-I14021 ~]# docker inspect a84bf753a617 | grep NetworkMode

"NetworkMode": "container:514a2b76ca81521c7190d15604a846111e39cc72201b980f71c7044e865f2341",

We can see that both containers have their network mode pointing to the container with the ID 514a2b76ca81. So the network for both containers is provided by this network container. In Kubernetes this is called the pause container.
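
The same check can be automated. Below is a minimal sketch that reads the JSON printed by docker inspect and groups containers by the container that owns their network namespace. The function name group_by_network_owner and the trimmed SAMPLE document are assumptions for illustration; real docker inspect output has many more fields, but Id and HostConfig.NetworkMode are the two used here.

```python
import json
from collections import defaultdict

# Trimmed stand-in for `docker inspect <id> <id>` output, using the IDs shown above.
SAMPLE = json.dumps([
    {"Id": "3bf5128f6603594f60c88972f6881d750c22d18aef28f85b18a5f19131a42549",
     "HostConfig": {"NetworkMode": "container:514a2b76ca81521c7190d15604a846111e39cc72201b980f71c7044e865f2341"}},
    {"Id": "a84bf753a617a54bb9891ceccaca595ecc154a05731faf48a25a87165ae8d036",
     "HostConfig": {"NetworkMode": "container:514a2b76ca81521c7190d15604a846111e39cc72201b980f71c7044e865f2341"}},
])


def group_by_network_owner(inspect_json: str) -> dict:
    """Map each network-owning container ID to the containers that join it."""
    groups = defaultdict(list)
    for container in json.loads(inspect_json):
        mode = container["HostConfig"]["NetworkMode"]
        # "container:<id>" means: reuse the network namespace of <id>.
        if mode.startswith("container:"):
            owner = mode.split(":", 1)[1]
            groups[owner].append(container["Id"])
    return dict(groups)
```

Calling group_by_network_owner(SAMPLE) yields a single key starting with 514a2b76ca81, with both application containers listed under it.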

Pause Container

Running multiple pieces of software in a single container is always a problem, so people preferred running multiple containers that are partially isolated and share an environment. In general, we have to create a parent container and then use the correct flags so that each new container shares the same environment.

The pause container serves as the parent container for all containers in a pod. Its main responsibility is sharing a network namespace between the containers in the pod. Apart from that, the pause container does nothing useful.

Suppose one of the containers in the pod goes down and a replacement comes up. How will the network of the old container be moved to the new one, and who will remember the network settings? This is handled by the pause container: it holds the network namespace, so a restarted container simply joins it again.
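
To make the "parent container plus correct flags" idea concrete, here is a small sketch that only builds the docker run command lines for such a setup, without executing them. The --net=container:NAME and --ipc=container:NAME flags are standard Docker options; the function name pod_like_commands, the container names and the parent image are hypothetical placeholders, and this is not how Kubernetes itself starts pods.

```python
def pod_like_commands(parent: str, parent_image: str, apps: dict) -> list:
    """Build docker run argument lists for a parent container and its members.

    `apps` maps a container name to the image it should run.
    """
    # The parent is started first and owns the namespaces.
    commands = [["docker", "run", "-d", "--name", parent, parent_image]]
    for name, image in apps.items():
        commands.append([
            "docker", "run", "-d", "--name", name,
            f"--net=container:{parent}",   # join the parent's network namespace
            f"--ipc=container:{parent}",   # join the parent's IPC namespace
            image,
        ])
    return commands
```

Each returned list could be passed to subprocess.run on a host with Docker installed. Because the members point at the parent rather than at each other, any one of them can be replaced without the shared network settings being lost.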

Pod to Pod Communication

Pod IP addresses are always accessible from other pods, no matter whether those pods are on the same node or on a different node.

Pod to Pod Communication - Same Node

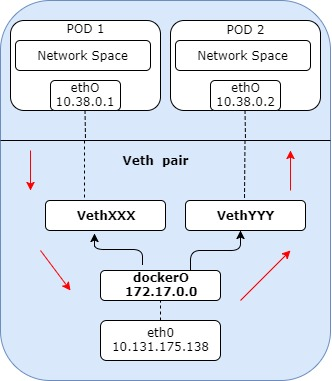

The docker0 bridge plays an important part here. Pod to pod communication within the same node goes through the bridge by default.

[root@manja17-I13330 kubenetes-config]# kubectl get pods

NAME READY STATUS RESTARTS AGE

testing-service-hk9nh 1/1 Running 0 11s

testing-service-mgsgl 1/1 Running 0 11s

[root@manja17-I13330 kubenetes-config]# kubectl describe pod testing-service-hk9nh | grep Node

Node: manja17-i14021/10.131.36.181

Node-Selectors: arch=arm

[root@manja17-I13330 kubenetes-config]# kubectl describe pod testing-service-hk9nh | grep IP

IP: 10.38.0.2

[root@manja17-I13330 kubenetes-config]# kubectl describe pod testing-service-mgsgl | grep Node

Node: manja17-i14021/10.131.36.181

Node-Selectors: arch=arm

[root@manja17-I13330 kubenetes-config]# kubectl describe pod testing-service-mgsgl | grep IP

IP: 10.38.0.1

[root@manja17-I13330 kubenetes-config]# kubectl exec testing-service-mgsgl -it -- bash

root@testing-service-mgsgl:/usr/src/app# ping 10.38.0.2

PING 10.38.0.2 (10.38.0.2): 56 data bytes

64 bytes from 10.38.0.2: icmp_seq=0 ttl=64 time=0.294 ms

64 bytes from 10.38.0.2: icmp_seq=1 ttl=64 time=0.091 ms

We can see that from a container inside a pod, we can talk to any other pod. This works in the same way as how containers on different nodes talk using the docker0 bridge and veth interfaces, as described below.

When the first pod wants to talk to the second pod, the packet leaves the Pod1 network namespace and reaches the vethXXX interface allocated to Pod1. From there it goes to the docker0 bridge. The destination IP is resolved with a broadcast on docker0, which then forwards the packet to vethYYY, and the packet finally reaches Pod2. Since both pods are on the same host, the main network interface eth0 is not involved.

If we look at iptables, we can see a masquerading rule on the host that Docker creates for outbound traffic. This rule is what gives the containers access to the internet.

[root@manja17-I13330 ~]# iptables -t nat -nL POSTROUTING

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0
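
As a simplified model of what this rule matches, the sketch below checks whether a packet's source address falls inside the 172.17.0.0/16 range from the listing. The function name is_masqueraded is an assumption for this example, and the check looks at the source address only; the -nL listing does not show interface matches, so any such conditions on the real rule are ignored here.

```python
import ipaddress

# Source range taken from the MASQUERADE rule in the listing above.
DOCKER_BRIDGE_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def is_masqueraded(source_ip: str, subnet=DOCKER_BRIDGE_SUBNET) -> bool:
    """Return True if a packet from source_ip matches the rule's source range."""
    return ipaddress.ip_address(source_ip) in subnet
```

For example, is_masqueraded("172.17.0.3") is True, while an address outside the range such as "10.38.0.1" gives False.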

Similarly, when we start a container and map its port to the host, Docker dynamically adds the corresponding rules to iptables. Let's start a container with a port mapping:

[root@manja17-I13330 ~]# docker run -d -p 8080:9876 docker.io/jagadesh1982/testing-service

ab1347f7ca57122444eeb2185e121b4faf64a7f8ef742407d1cd43c8948adb48

[root@manja17-I13330 ~]# docker ps | head -n2

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ab1347f7ca57 docker.io/jagadesh1982/testing-service "python ./testing_ser" 17 seconds ago Up 16 seconds 0.0.0.0:8080->9876/tcp stupefied_colden

[root@manja17-I13330 ~]# iptables -t nat -nL | grep DOCKER

DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)

[root@manja17-I13330 ~]# iptables -t nat -nL | grep 8080

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8080 to:172.17.0.3:9876

We can see that traffic arriving on host TCP port 8080 (tcp dpt:8080) is forwarded by a DNAT rule to port 9876 of the container at 172.17.0.3.
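
If we want to read such mappings programmatically, a small parser over the iptables output is enough. The sketch below is written against the exact line format shown above; the function name parse_dnat and the returned dictionary keys are assumptions for this example, and other iptables versions or options may print the rule differently.

```python
import re

# Matches lines such as:
# DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8080 to:172.17.0.3:9876
DNAT_RE = re.compile(
    r"DNAT\s+(?P<proto>\w+)\s.*dpt:(?P<host_port>\d+)\s+"
    r"to:(?P<ip>[\d.]+):(?P<port>\d+)"
)


def parse_dnat(line: str):
    """Extract protocol, host port and container target from a DNAT rule line."""
    match = DNAT_RE.search(line)
    if match is None:
        return None  # not a DNAT line in the expected format
    return {
        "protocol": match.group("proto"),
        "host_port": int(match.group("host_port")),
        "container_ip": match.group("ip"),
        "container_port": int(match.group("port")),
    }
```

Feeding it the DNAT line from the listing gives host port 8080 mapped to 172.17.0.3 on port 9876, which is the same mapping docker ps reports as 0.0.0.0:8080->9876/tcp.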
